Cloud Cost Savings using ECL Watch

Since joining the HPCC Systems platform team with the LexisNexis Risk Solutions Group in 2015, Shamser Ahmed has been working as a Senior Software Engineer, primarily on the code generator, Thor, ROXIE and statistical improvements for monitoring purposes. Solving real-world problems is at the forefront of his efforts. One of those problems is the cost of processing data in the cloud. From HPCC Systems 8.6.0, features have been added to help track and reduce costs.

In this blog, Shamser covers the most efficient ways to track and trend cloud usage costs, as well as how to set configuration parameters.

***********************************************

One of the benefits of moving to the cloud is that resources can be dynamically provisioned, but this also introduces the potential for unexpected costs. ECL developers are at the forefront of controlling those costs because the cost of providing a service is affected by the design of ECL queries and datasets (including indexes). ECL developers need suitable cost information to make design choices that achieve an acceptable balance between performance, cost and developer time. HPCC Systems 8.6.0 includes a number of new features and enhancements that give ECL developers, managers and DevOps access to cost information. This information supports cost-efficient design choices by helping them to:

- Assess the costs of providing a service and identify where the costs are incurred when providing that service

- Identify the most appropriate storage for a logical file (performance vs hot vs cold storage)

- Balance spin-up time vs idle cost

- Design the infrastructure, such as the sizing of the cluster, the specification of the hardware, networking and the use of child processes vs pods

- Decide which areas to focus on for improvement, for example, identifying high-cost areas

- Ensure that the costs of executing a job and the cost of storing the associated data are in line with expectations

- Highlight potential issues from unusual cost changes

- Identify wasteful or unnecessary storage and jobs

- Compare the cost of an on-premises data center with the cost of hosting in the cloud

This cost information may also be used by HPCC Systems platform developers to identify possible opportunities for improvement within the platform itself.

The three types of costs that are currently being tracked are:

- Execution Cost

- Storage Cost

- File Access Cost

Let’s take a more in-depth look into these types of costs and how to manage them.

Execution Cost

Execution Cost is the cost of executing the workunit, graph and subgraphs on the Thor cluster. It covers all the nodes directly required to execute the job, including the cost of:

- Worker nodes

- Compiler nodes

- Agent nodes and the manager node

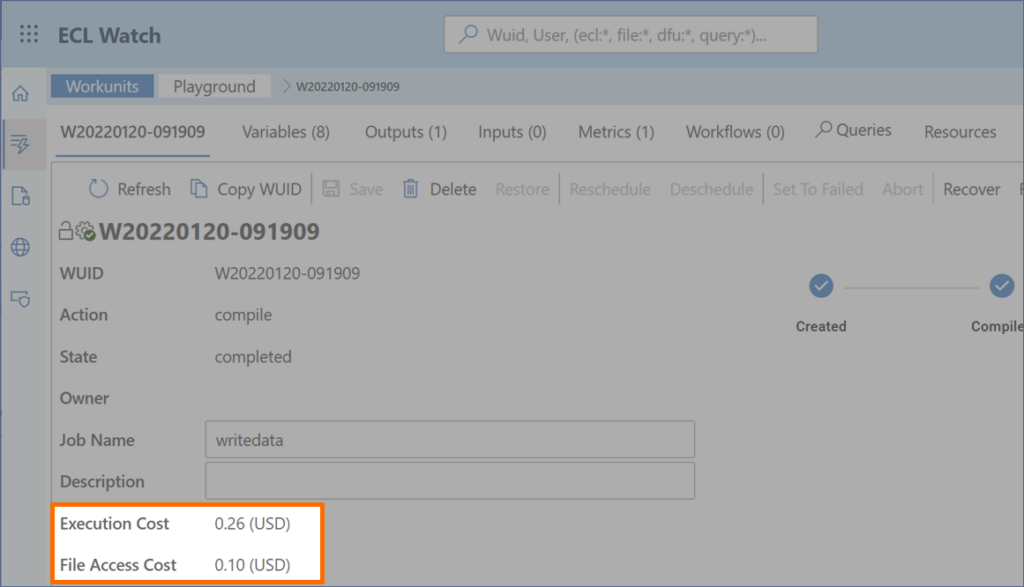

A workunit’s execution cost value is displayed in its summary page and is broken down at the graph, subgraph and activity level. The graph and subgraph cost values are available in the metrics and graph viewer.

Note: The execution cost of ROXIE workunits is not currently implemented.
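As a rough illustration of how such a figure could be built up, the sketch below multiplies each node's CPU count by the time it was in use and by an hourly per-CPU rate (the perCpu parameter covered in the configuration sections). The node list, CPU counts, durations and the `execution_cost` helper are hypothetical, and the platform's own calculation may differ.

```python
from dataclasses import dataclass


@dataclass
class Node:
    role: str     # e.g. "worker", "manager", "agent", "compiler"
    cpus: float   # CPUs allocated to the node (illustrative value)
    hours: float  # time the node was used by the job, in hours


def execution_cost(nodes, per_cpu_hour):
    """Sum cpus * hours * hourly per-CPU rate over every node used by the job."""
    return sum(n.cpus * n.hours * per_cpu_hour for n in nodes)


# Hypothetical job: two workers, a manager, an agent and a short compile step.
nodes = [
    Node("worker", cpus=4, hours=0.5),
    Node("worker", cpus=4, hours=0.5),
    Node("manager", cpus=2, hours=0.5),
    Node("agent", cpus=1, hours=0.5),
    Node("compiler", cpus=1, hours=0.1),
]

cost = execution_cost(nodes, per_cpu_hour=0.126)
print(f"Estimated execution cost: {cost:.4f}")
```

Because the estimate scales with both CPU count and elapsed time, a larger cluster only reduces the cost if it shortens the job by more than the extra CPUs it adds.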

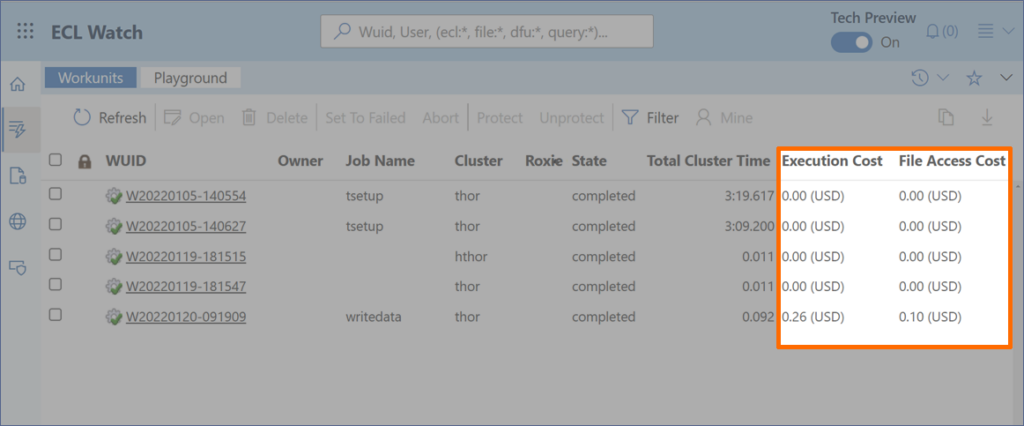

Two new columns have been added to the Workunits table in ECL Watch to show the cost values for each workunit. The table may be sorted by either cost column to find the most expensive jobs.

The costs are also shown on the Workunits summary page in ECL Watch:

Job Guillotine

The risk of runaway costs is a concern with uncapped usage-based charging. The job guillotine feature has been provided to manage this risk by setting a limit on the costs that a job may incur.

Note: This feature is supported for Thor jobs only.

Storage Cost

This is the cost of hosting the data in the storage plane. It does not include the cost of data operations, such as read or write costs.

Note: No costs are recorded for temporary or spill files, because the local storage is included in the price of the VM used to calculate the execution costs.

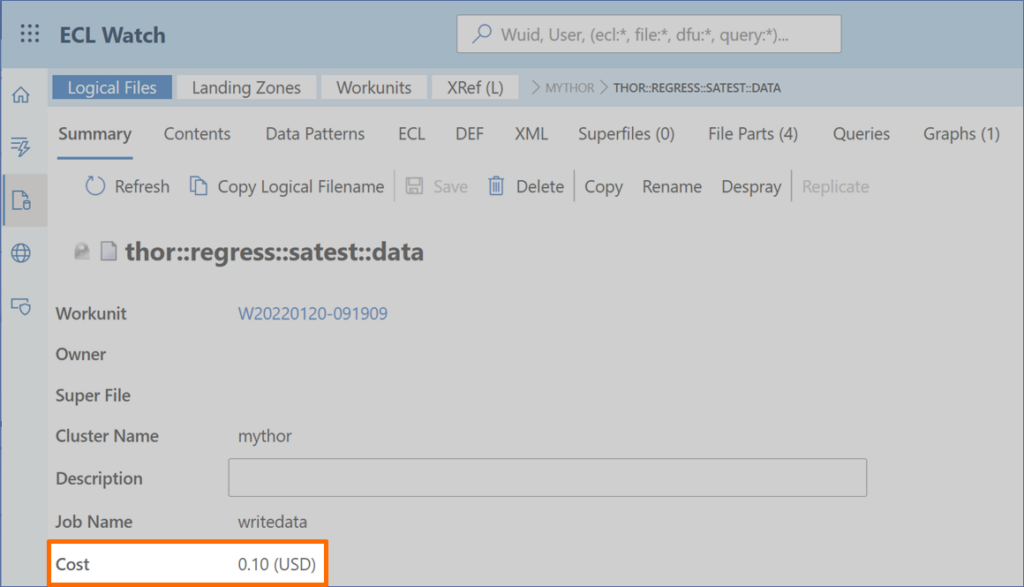

At present, the storage costs cannot be viewed as a separate value in ECL Watch. They can only be viewed as part of a cost field in a logical file’s summary page. The cost field includes other file related costs.
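For a sense of scale, the at rest portion can be approximated from the file size, the time it is kept, and a per-gigabyte monthly rate (the storageAtRest parameter covered in the configuration sections). This is only a sketch: the `storage_at_rest_cost` helper and the choice of 2**30 bytes per gigabyte are assumptions, not the platform's implementation.

```python
BYTES_PER_GB = 1024 ** 3  # assumption: a gigabyte is counted as 2**30 bytes


def storage_at_rest_cost(size_bytes, months, rate_per_gb_month):
    """Approximate cost of keeping a file in a storage plane."""
    size_gb = size_bytes / BYTES_PER_GB
    return size_gb * months * rate_per_gb_month


# Hypothetical 500 GB logical file kept for one month at 0.0135 per GB per month.
monthly = storage_at_rest_cost(500 * BYTES_PER_GB, months=1, rate_per_gb_month=0.0135)
print(f"Estimated at rest cost: {monthly:.2f}")
```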

File Access Cost

Many storage planes have a separate charge for data operations. The cost of reading and writing to the file will be included in the file access cost value. Any other cost associated with operations (such as delete or copy) will not be tracked or included as part of file access cost at this time.
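A minimal sketch of this charge, assuming rates are quoted per 10,000 operations (as the storageReads and storageWrites parameters in the configuration sections are): the `file_access_cost` helper and the operation counts are hypothetical.

```python
OPS_PER_UNIT = 10_000  # rates are quoted per 10,000 operations


def file_access_cost(reads, writes, read_rate, write_rate):
    """Approximate cost of the read and write operations performed on a file."""
    return (reads / OPS_PER_UNIT) * read_rate + (writes / OPS_PER_UNIT) * write_rate


# Hypothetical file: 2,000,000 reads and 50,000 writes.
access = file_access_cost(2_000_000, 50_000, read_rate=0.0485, write_rate=0.0038)
print(f"Estimated file access cost: {access:.3f}")
```

Only reads and writes appear in the calculation, which mirrors the point above that delete and copy operations are not tracked.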

The file access cost is currently shown as part of the cost field in the Logical File’s summary page in ECL Watch. Planned improvements for future versions include replacing the cost field with two new fields that show the at rest cost and the file access cost as separate values.

The costs incurred by a workunit for accessing logical files are also recorded in the workunit’s statistics and attributes. The read/write cost is recorded at the activity level and accumulated at the subgraph, graph and workflow scope levels. The total file access cost for a workunit is recorded with the workunit and displayed in the summary page.
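The accumulation described above can be pictured as a roll-up through nested scopes. The sketch below is illustrative only: the colon-separated scope names (such as `w1:graph1:sg1:a2`), the cost values and the `roll_up` helper are assumptions made for the example.

```python
from collections import defaultdict


def roll_up(activity_costs):
    """Add each activity's cost to every enclosing scope (subgraph, graph, workflow)."""
    totals = defaultdict(float)
    for scope, cost in activity_costs.items():
        parts = scope.split(":")
        # Ancestors of "w1:graph1:sg1:a2" are "w1", "w1:graph1" and "w1:graph1:sg1".
        for depth in range(1, len(parts)):
            totals[":".join(parts[:depth])] += cost
    return dict(totals)


# Hypothetical read/write costs recorded per activity.
activity_costs = {
    "w1:graph1:sg1:a2": 0.010,
    "w1:graph1:sg1:a3": 0.020,
    "w1:graph1:sg5:a6": 0.005,
    "w1:graph2:sg8:a9": 0.040,
}

totals = roll_up(activity_costs)
for scope in sorted(totals):
    print(f"{scope}: {totals[scope]:.3f}")
```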

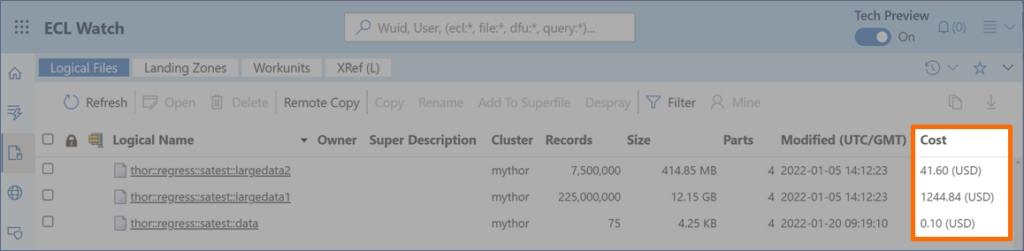

The Logical Files table in ECL Watch has been updated to show the costs incurred for storing and accessing logical files:

The new cost field is shown in the Logical File summary page. It is the combined cost of storing and accessing the data.

Note: This new cost field is likely to be replaced by two new fields to show at rest cost and access costs separately.

The cost information is currently only generated for Thor and hThor jobs.

Caveats

All the cost values calculated are approximate. They are intended to be used only as a guide. Inaccuracies may result from any of the following:

- Not tracking file access from outside the platform

- Not tracking file operations other than read/write operations

- Caching by NFS or OS

- Under-utilized nodes

- Resources shared between jobs – for example, re-use of a compiler node

- Any other unknowns

Setting Configuration Parameters for the HPCC Systems Cloud Native Platform

The configuration provides the pricing information and currency formatting information. The following configuration parameters are supported:

- currencyCode – Used for currency formatting of cost values

- perCpu – Cost per hour of a single CPU

- storageAtRest – Storage cost per gigabyte per month

- storageReads – Cost per 10,000 read operations

- storageWrites – Cost per 10,000 write operations

Helm configuration

The default values.yaml configuration file is configured with the following cost parameters in the global/cost section:

    cost:
      currencyCode: USD
      perCpu: 0.126
      storageAtRest: 0.0135
      storageReads: 0.0485
      storageWrites: 0.0038

The currencyCode attribute should be set to the ISO 4217 currency code. (The platform defaults to USD if the currency code is missing.)

The perCpu from the global/cost section applies to every component that has not been configured with its own perCpu value. A perCpu value specific to a component may be set by adding a cost/perCpu attribute under that component section:

    dali:
    - name: mydali
      cost:
        perCpu: 0.24
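The fallback rule for perCpu can be sketched as a simple lookup. The dictionaries below stand in for parsed Helm values, and the `per_cpu_for` helper and the second component entry are hypothetical.

```python
def per_cpu_for(component, global_cost):
    """Use the component's own perCpu if it has one, else the global value."""
    return component.get("cost", {}).get("perCpu", global_cost["perCpu"])


global_cost = {"currencyCode": "USD", "perCpu": 0.126}

mydali = {"name": "mydali", "cost": {"perCpu": 0.24}}  # component-level override
mythor = {"name": "thor"}                              # no cost section of its own

print(per_cpu_for(mydali, global_cost))
print(per_cpu_for(mythor, global_cost))
```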

The Thor components support additional cost parameters which are used for the job guillotine feature:

- limit attribute – Sets the “soft” cost limit that a workunit may incur. The limit is “soft” in that it may be overridden by the maxCost ECL option. A node will be terminated if it exceeds its maxCost value (if set) or the limit attribute value (if maxCost is not set).

- hardlimit attribute – Sets the absolute maximum cost limit, which may not be overridden by setting the ECL option. A maxCost value that exceeds the hardlimit is ignored.

    thor:
    - name: thor
      prefix: thor
      numWorkers: 2
      maxJobs: 4
      maxGraphs: 2
      cost:
        limit: 10.00      # maximum cost is $10, overridable with maxCost option
        hardlimit: 20.00  # maximum cost is $20, cannot be overridden
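One way to read how limit, hardlimit and maxCost interact is sketched below. The `effective_cost_limit` helper is hypothetical. It also assumes that an ignored maxCost falls back to the soft limit and that the result never exceeds the hardlimit, neither of which is stated explicitly above.

```python
from typing import Optional


def effective_cost_limit(limit: Optional[float],
                         hardlimit: Optional[float],
                         max_cost: Optional[float]) -> Optional[float]:
    """Return the cost at which a job would be stopped, or None if uncapped."""
    # A maxCost above the hard limit is ignored.
    # Assumption: the soft limit then applies instead.
    if max_cost is not None and hardlimit is not None and max_cost > hardlimit:
        max_cost = None
    # maxCost (if set and accepted) overrides the soft limit.
    chosen = max_cost if max_cost is not None else limit
    if chosen is None:
        return hardlimit
    # Assumption: the hard limit caps whatever value was chosen.
    return min(chosen, hardlimit) if hardlimit is not None else chosen


# Using the example configuration: limit 10.00, hardlimit 20.00.
print(effective_cost_limit(10.0, 20.0, None))  # no maxCost set
print(effective_cost_limit(10.0, 20.0, 15.0))  # maxCost within the hard limit
print(effective_cost_limit(10.0, 20.0, 25.0))  # maxCost above the hard limit
```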

The storage cost parameters (storageAtRest, storageReads and storageWrites) may be added under the storage plane cost section to set cost parameters specific to the storage plane:

    storage:
      planes:
      - name: dali
        storageClass: ""
        storageSize: 1Gi
        prefix: "/var/lib/HPCCSystems/dalistorage"
        pvc: mycluster-hpcc-dalistorage-pvc
        category: dali
        cost:
          storageAtRest: 0.01
          storageReads: 0.001
          storageWrites: 0.04

The storage cost parameters under the global section are only used if no cost parameters are specified on the storage plane.
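The sketch below shows one reading of that rule, in which a plane's cost section replaces the global storage parameters as a whole rather than key by key. That all-or-nothing behaviour is an assumption. The `storage_cost_params` helper and the second plane are hypothetical, and the dictionaries stand in for parsed Helm values.

```python
STORAGE_KEYS = ("storageAtRest", "storageReads", "storageWrites")


def storage_cost_params(plane, global_cost):
    """Pick the storage cost parameters that apply to a storage plane."""
    plane_cost = plane.get("cost")
    # Assumption: any plane-level cost section takes over entirely;
    # the global values are used only when the plane has none.
    source = plane_cost if plane_cost else global_cost
    return {key: source.get(key) for key in STORAGE_KEYS}


global_cost = {"storageAtRest": 0.0135, "storageReads": 0.0485, "storageWrites": 0.0038}

dali_plane = {
    "name": "dali",
    "cost": {"storageAtRest": 0.01, "storageReads": 0.001, "storageWrites": 0.04},
}
data_plane = {"name": "data"}  # hypothetical plane with no cost section

print(storage_cost_params(dali_plane, global_cost))
print(storage_cost_params(data_plane, global_cost))
```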

Setting Configuration Parameters for the HPCC Systems Bare Metal Platform

The cost parameters may only be set by editing environment.xml (usually located in /etc/HPCCSystems). They are not supported by the configenv interface and may not be amended using it.

The cost parameters are provided as attributes of the “cost” child element located under the /Environment/Hardware section, as shown in this example extract of an environment.xml file:

    <Environment>
      <Hardware>
        <ComputerType …
        <Domain …
        <Computer …
        <Switch …
        <cost currencyCode="USD" perCpu="0.113" storageAtRest="0.0135" storageReads="0.0485" storageWrites="0.0038"/>
      </Hardware>
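To check what has been configured, the cost element can be read back with a short script. The sketch below uses Python's standard xml.etree.ElementTree module on a cut-down, well-formed sample rather than a real environment.xml. The `read_cost_settings` helper is hypothetical, and falling back to USD when currencyCode is absent is assumed to match the platform default.

```python
import xml.etree.ElementTree as ET

# Cut-down sample; a real environment.xml contains many more elements.
SAMPLE = """
<Environment>
  <Hardware>
    <cost currencyCode="USD" perCpu="0.113" storageAtRest="0.0135"
          storageReads="0.0485" storageWrites="0.0038"/>
  </Hardware>
</Environment>
"""


def read_cost_settings(xml_text):
    """Return the attributes of /Environment/Hardware/cost as a dictionary."""
    root = ET.fromstring(xml_text.strip())  # root is the Environment element
    cost = root.find("./Hardware/cost")
    if cost is None:
        return {}
    # Assumption: a missing currencyCode falls back to USD.
    settings = {"currencyCode": cost.get("currencyCode", "USD")}
    for name in ("perCpu", "storageAtRest", "storageReads", "storageWrites"):
        value = cost.get(name)
        if value is not None:
            settings[name] = float(value)
    return settings


settings = read_cost_settings(SAMPLE)
print(settings)
```

To run it against a real file, pass the file's contents to `read_cost_settings` instead of `SAMPLE`.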

More New Features and Improvements Coming Soon

There are significant opportunities for the HPCC Systems platform to help developers, managers and DevOps in managing costs in the cloud. The following new features are expected to be added during 2022:

- Cost information from Roxie

- Include the cost of spraying/despraying

- Historic file costs

- Support for cost tracking when using Azure hpc-cache

- Track the costs of compilation

- Report DALI storage costs

- Track costs from deleted work units

- Track costs from deleted files

- Accumulated cost by user

Cost calculation in HPCC Systems will evolve and improve to reflect the actual costs incurred more closely. Reporting the cost information in a form that is useful and accessible to users is a high priority, so expect the presentation and reporting of the cost information to improve as this ongoing development project progresses.

We welcome your feedback and suggestions about features and improvements you would find useful. Message us using our Community Forum or create a ticket using our Community Issue Tracker to share your thoughts.